Two years ago, we wrote a small primer about quantum computing. Recently, a paper was leaked describing a quantum computing breakthrough by Alphabet [NASDAQ: GOOG] — prompting a flurry of media stories about what this development might mean. We reprint our description to help you understand what quantum computing is — and update it with news about GOOG’s breakthrough and what it might imply for Bitcoin and other cryptocurrencies.

Quantum Computing, Industrial Revolutions, and the Death of Moore’s Law

Quantum computing is a classic case of the dilemma that confronts investors who like to monitor long-term trends and themes in technology and society. Even now, quantum computing is not an investable theme. The companies doing the most work on it are so large that any gains from it are unlikely to move their bottom line within the time horizon of most retail investors. The companies that will be the must-own winning investments in the future probably don’t exist yet. But investors still have to pay attention. The world is on the cusp of disruption by a set of technologies that will mark a new inflection point in modern industrial history. At the nexus of all those technologies sits quantum computing.

You’re probably familiar with “Moore’s Law,” the principle first stated by Intel [NASDAQ: INTC] co-founder Gordon Moore in 1965, which describes the exponential growth of computing power and its declining cost. Over the past half century, computing power (measured as the density of transistors on an integrated circuit) has doubled, and its price has halved, about every two years.
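The compounding hidden in that rule of thumb is easy to underestimate. Here is a minimal Python sketch of the arithmetic, assuming a clean, uninterrupted two-year doubling period (real-world progress was lumpier); the function name `moores_law_factor` is our own, purely for illustration.

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth multiplier after `years` if a quantity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Fifty years of doubling every two years amounts to 25 doublings.
density_multiplier = moores_law_factor(50)

# If the price of a unit of computing halves on the same schedule,
# it falls by the reciprocal of the same factor.
relative_cost = 1 / density_multiplier

print(f"Transistor density multiplier after 50 years: {density_multiplier:,.0f}")
print(f"Cost of the same computation after 50 years: {relative_cost:.2e} of the original")
```

Twenty-five doublings work out to a multiplier of about 33.5 million, which is how a pocket device can outrun a 1980s supercomputer.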

The transformation of the modern world has been driven, so far, by three phases of industrialization. First, starting in the late 18th and early 19th centuries, came steam power and railroads. Next, starting in the 1870s, came oil, chemicals, automobiles, electrification, and mass production. And most recently, starting in the 1940s, came computers, digitization, microprocessors, and ultimately the internet. Each of these phases dramatically accelerated the pace of technological and social change: perhaps we could call them the Age of Steam, the Age of the Automobile, and the Digital Age.

If there is a single engine powering the Digital Age, it has been Moore’s Law. Observations like the following are commonplace but bear repeating: the cellphone in your pocket has more computing power than a Cray-2, the 1985 supercomputer whose liquid cooling system captured the public imagination and which cost $39 million in 2019 dollars.

The exponential rise of cheap computing power has given us a world now on the cusp of functional artificial intelligence and computers that can program themselves and acquire, interpret, and use data at a superhuman and exponentially accelerating pace. Almost any technological innovation you read about in daily media was enabled by the advances of the Digital Age. In the past few weeks, for example, new gene-editing technology has been on the front page (although, of course, we had written about it in 2015). This technology would have been impossible without the sequencing of the human genome that the Digital Age, and Moore’s Law, delivered. Only a decade ago, two prominent economists, Frank Levy and Richard Murnane, could casually cite driving a car on a busy street as the kind of task that computers could never master; not only is that supposedly impossible task already being done, but investors are discussing how it will transform the bottom line of companies such as Alphabet [NASDAQ: GOOG].

But as analysts and industry spokespeople increasingly recognize, Moore’s Law is coming to an end. The Semiconductor Industry Associations of the U.S., Europe, Japan, South Korea, and Taiwan have put out an annual chip-technology forecast for the last 25 years, based on Moore’s Law — and they announced last year that the report for 2015 would be the last. Chip architectures are coming up against hard physical limits as they approach the atomic level.

(There are still some prominent cheerleaders for Moore’s Law; INTC’s former CEO, Brian Krzanich, wrote that he had “witnessed the advertised death of Moore’s Law no less than four times” during his career. Other executives and analysts observe that if INTC really had the secret to keep Moore’s Law going, they would be doing better at getting their presumably faster and cheaper chips into mobile devices.)

Moore’s Law, then, brought us to the threshold of a new stage in the saga of the world’s transformation by technology: the stage whose first signs include universal connectivity, the internet of things, big data, artificial neural networks, and machine learning. Futurists are glimpsing the world that we’ve discussed in these pages before — a world where self-creating and self-programming computers take humans out of the loop as they unshackle innovation from the cognitive limitations of their flesh-bound creators. And just at this threshold, Moore’s Law is faltering.

What’s Coming Next — Fear and Hope

We predict that you will begin to hear more about “the end of Moore’s Law.” It is a theme that could easily be taken up alongside other current pessimistic themes — for example, that technology and demographics are setting up a deflationary future that will result in permanently lowered prospects for economic growth in much of the developed and developing worlds, or that automation will result in a permanently unemployed underclass that will have to be pacified with a universal basic income (basic income and the internet — the modern “bread and circuses”).

The same sorts of pessimism and anxiety have greeted each phase of the modern world’s path of industrial development. This kind of reaction seems to be an inevitable component of society’s adjustment to the new world that a technological revolution is ushering in. The next phase, which will likely be known as the Age of Artificial Intelligence, will be no different. Investors who allow themselves to be persuaded by pessimistic arguments are simply going to miss out on the most powerful technological, social, and economic trends.

So here’s our take on why the death of Moore’s Law may simply mark the next acceleration of technological transformation, and how Moore’s Law may in fact be reborn with the Age of Artificial Intelligence.

The answer is quantum computing. (If this sounds too much like science fiction, remember: self-driving cars were still science fiction in 2005.)

Quantum Computing and the Rebirth of Moore’s Law

We’ve discussed quantum computing here before. There’s a reason we’re mentioning it again now, which we’ll discuss below, but first here’s a brief refresher.

A classical computer stores data as “bits,” each of which is either a zero or a one. A quantum computer exploits a property of quantum mechanics called “superposition,” in which a given particle may be not just in one state or another, but in a combination of both at once. Thus a quantum bit, or “qubit,” may be a zero, a one, or a superposition of both states. The upshot is that a classical computer with n bits can be in any one of 2ⁿ different states at a given moment, whereas a quantum computer with n qubits can be in a superposition of all 2ⁿ of those states simultaneously. So as the number of qubits increases, a quantum computer’s advantage on suitable problems could grow exponentially.
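To see why 2ⁿ matters, consider what an ordinary computer must track to simulate qubits: one complex “amplitude” for every one of the 2ⁿ possible bit patterns. The sketch below is a toy, pure-Python state-vector simulation (not how GOOG or anyone else builds quantum hardware); it applies a Hadamard gate, a standard gate we chose for illustration, to each qubit so that all 2ⁿ patterns end up equally weighted. The helper names are hypothetical.

```python
import math


def uniform_superposition(n_qubits: int) -> list:
    """Toy state-vector simulation: start in |00...0> and apply a Hadamard gate to every qubit.

    The returned list holds one complex amplitude per classical bit pattern, i.e. 2**n_qubits entries.
    """
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j  # every qubit starts in the |0> state
    h = 1 / math.sqrt(2)
    for qubit in range(n_qubits):
        mask = 1 << qubit
        new_state = state[:]
        for index in range(len(state)):
            if index & mask == 0:  # visit each (bit = 0, bit = 1) pair once
                a, b = state[index], state[index | mask]
                new_state[index] = h * (a + b)
                new_state[index | mask] = h * (a - b)
        state = new_state
    return state


def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector at 16 bytes per double-precision complex amplitude."""
    return bytes_per_amplitude * 2 ** n_qubits


amplitudes = uniform_superposition(3)
probabilities = [abs(a) ** 2 for a in amplitudes]
print(len(amplitudes), [round(p, 3) for p in probabilities])
print(f"53 qubits: about {state_vector_bytes(53) / 1e15:,.0f} petabytes")
```

Every added qubit doubles the list. At 53 qubits, a naive full state vector would need roughly 144 petabytes of memory.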

The hurdles to the arrival of “quantum supremacy” are technical. To remain in a superposed state, a qubit must be completely isolated from interaction with the surrounding world. In practical terms, that means many quantum computing designs, including GOOG’s superconducting chips, must be kept colder than intergalactic space. Critics of quantum computation, including those who once maintained, incorrectly, that it would simply be impossible, focus on the problems caused by “noise”: the random errors that creep in because a system is imperfectly insulated from its surroundings.
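A back-of-the-envelope model shows why noise is such a stubborn obstacle. Assume, as a deliberate simplification, that every quantum operation fails independently with some small probability and that there is no error correction; then the chance that an entire computation runs cleanly shrinks exponentially with its length. The error rates and function name below are hypothetical, not measurements of any real device.

```python
def clean_run_probability(error_rate_per_op: float, n_ops: int) -> float:
    """Probability that none of n_ops operations fails, assuming independent errors."""
    return (1 - error_rate_per_op) ** n_ops


# Hypothetical per-operation error rates and circuit sizes, for illustration only.
for error_rate in (0.01, 0.001):
    for n_ops in (100, 1_000, 10_000):
        p = clean_run_probability(error_rate, n_ops)
        print(f"error rate {error_rate:<6} ops {n_ops:>6}: clean-run probability {p:.3g}")
```

This is one reason useful machines are expected to rely on quantum error correction, which spreads each reliable “logical” qubit across many physical qubits.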

Alphabet Demonstrates Quantum Supremacy

Recently a paper was leaked in which a team of GOOG researchers used a 53-qubit quantum computer to demonstrate quantum supremacy: their device solved a problem that would have taken a classical computer many orders of magnitude longer. (In this case, the quantum machine took 200 seconds to solve a problem that, by the researchers’ estimate, would have taken a classical computer 10,000 years.) The problem itself was a rather artificial and arbitrary construct, not something intrinsically useful or interesting. The point was to demonstrate a principle, not to solve a problem. (Readers interested in the details of the problem, and how the solution could even be checked for accuracy, can read this excellent article by computer scientist Scott Aaronson.)
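Taking the paper’s figures at face value, the size of the claimed gap is easy to compute. A minimal sketch, assuming a 365.25-day year:

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumes a 365.25-day year

quantum_seconds = 200                          # reported running time on the quantum chip
classical_seconds = 10_000 * SECONDS_PER_YEAR  # estimated classical running time

speedup = classical_seconds / quantum_seconds
print(f"Claimed speedup: about {speedup:.2e}x "
      f"({math.log10(speedup):.1f} orders of magnitude)")
```

That is a factor of roughly 1.6 billion, a little over nine orders of magnitude.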

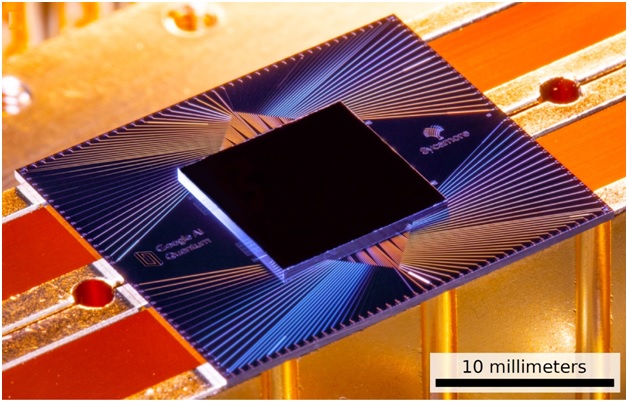

GOOG’s Sycamore Quantum Computing Chip

Source: NASA

What It Means For Bitcoin and Cryptos

Does this mean that investable, transformative quantum computing is suddenly right around the corner? Probably not. This is something like the first flight of the Wright Flyer in 1903, rather than the first transatlantic commercial jet flight in 1958. Many analysts (including us) have written about the risks quantum computing poses to Bitcoin and other cryptocurrencies, which rely on the same kinds of cryptography that protect virtually all data today. Back in 2017, as Bitcoin was accelerating into its meteoric rise, we wrote:

“Both of the central pillars of digital currencies — cryptographic identity and blockchain construction — rely on mathematical problems. Those problems need to be either impossible (in the case of cryptographic identity) or extremely difficult, time-consuming, and costly (in the case of blockchain construction). With current computer technology, all is well: the unsolvable is unsolvable, and the extremely difficult is extremely difficult. But there’s a new technology coming: quantum computing. The inflection in computing power that quantum computing inaugurates could quite possibly break all existing cryptocurrencies. Indeed, it could/will break all existing cryptography.”
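The “extremely difficult, time-consuming, and costly” half of that passage refers to proof-of-work. The toy below is a simplified illustration, not Bitcoin’s actual rules (real mining double-hashes a binary block header with SHA-256 and compares the result to a numeric target): it searches for a nonce whose SHA-256 hex digest begins with a given number of zeros. Each extra zero multiplies the expected work by 16, while checking a claimed answer takes a single hash. The function names are hypothetical.

```python
import hashlib


def toy_proof_of_work(block_data: str, difficulty: int) -> tuple:
    """Return (nonce, digest) for the first nonce whose SHA-256 hex digest starts with `difficulty` zeros."""
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
        nonce += 1


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a solution costs one hash, however long the search took."""
    digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)


nonce, digest = toy_proof_of_work("example block", 4)
print(nonce, digest, verify("example block", nonce, 4))
```

For this kind of unstructured search, Grover’s algorithm, the best-known quantum approach, offers only a quadratic speedup.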

So does the new GOOG achievement mean that moment is here? Nope. As Aaronson notes, a machine like GOOG’s 53-qubit device would need to scale to millions of qubits to crack the toughest current encryption, and we’re not yet close to that. This development is a warning shot, not a sign that the internet’s data security is about to be catastrophically compromised.
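The “cryptographic identity” pillar rests on problems like the discrete logarithm: computing a public value from a secret number is easy, but working backward is infeasible at real key sizes for classical computers. The toy below uses a tiny prime and modular exponentiation purely for illustration; Bitcoin actually uses elliptic-curve signatures (ECDSA over the secp256k1 curve), which rest on an elliptic-curve version of the same problem. Shor’s algorithm could solve both efficiently, but only on a large, error-corrected quantum computer of the kind that does not yet exist. All names and parameters here are hypothetical.

```python
from typing import Optional


def toy_public_key(private_key: int, g: int, p: int) -> int:
    """Public value g**private_key mod p: fast to compute even for huge numbers."""
    return pow(g, private_key, p)


def brute_force_private_key(public_key: int, g: int, p: int) -> Optional[int]:
    """Recover the secret exponent by trying every candidate in order."""
    value = 1  # g**0 mod p
    for candidate in range(p - 1):
        if value == public_key:
            return candidate
        value = (value * g) % p
    return None


# Toy parameters: p = 101 is prime and g = 2 generates all nonzero values mod 101.
p, g = 101, 2
secret = 37
public = toy_public_key(secret, g, p)
print(public, brute_force_private_key(public, g, p))
```

The naive loop takes up to p - 1 steps, so each extra bit of key length roughly doubles it. For the elliptic curves Bitcoin uses, the best known classical attacks are far faster than this loop but still take time exponential in the key size, which keeps 256-bit keys out of classical reach.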

Investment implications: Quantum computing is not yet an investable theme for retail investors. The major public companies developing the technology may never see direct benefits from it that significantly affect their bottom line. The successful small companies that will rise to future prominence probably do not yet exist. Still, investors should watch the trend so that they will recognize when it becomes investable and when it begins to disrupt established industries and technologies. Please note that principals of Guild Investment Management, Inc. (“Guild”) and/or Guild’s clients may at any time own any of the stocks mentioned in this article, and may sell them at any time. Currently, Guild’s clients own INTC and GOOG. In addition, for investment advisory clients of Guild, please check with Guild prior to taking positions in any of the companies mentioned in this article, since Guild may not believe that particular stock is right for the client, either because Guild has already taken a position in that stock for the client or for other reasons.